Boundless Informant: NSA’s complex tool for classifying global intelligence

A new batch of classified NSA documents leaked to the media reveals details of a comprehensive piece of software the NSA uses to analyze and evaluate intelligence gathered across the globe, as well as its data extraction methods.

The top-secret documents released by the Guardian shed light on the National Security Agency’s data-mining tool, which is used to count and categorize metadata gathered and stored in numerous databases around the world.

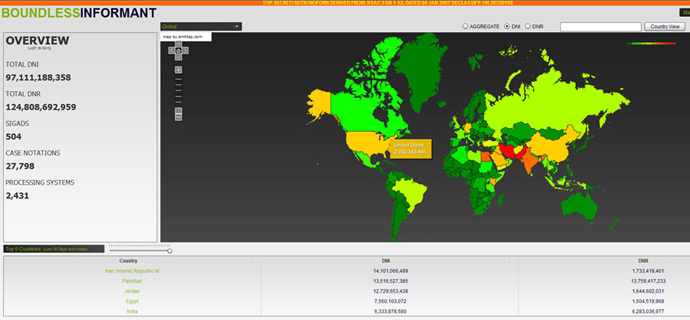

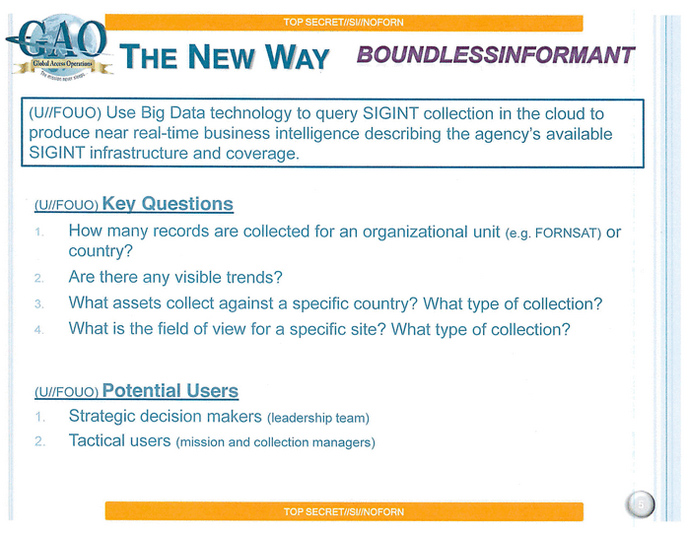

Known as Boundless Informant, the software gives its operator a graphical view of how many records were collected for a specific “organizational unit” or country, what type of data was collected and what type of collection was used. The program also helps determine trends in data collection for both strategic and tactical decision making, according to the slides.

One of the slides shows part of the Informant’s user interface: a world map with countries color-coded from green to red depending on the number of records collected there. While Iran, Pakistan and some other states are predictably “hottest” according to the map, the agency collected almost 3 billion pieces of intelligence in the US in March 2013 alone.
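To make the idea of a color-coded record count concrete, here is a minimal Python sketch of that kind of tally. It is an illustration only and not the NSA's software: the record layout, the placeholder country codes "AA", "BB" and "CC", and the helper names `count_by_country` and `heat_color` are all assumptions made for this example.

```python
from collections import Counter

def count_by_country(records):
    """Tally how many records carry each (hypothetical) country code."""
    return Counter(record["country"] for record in records)

def heat_color(count, max_count):
    """Map a count to an RGB tuple on a green (low) to red (high) scale."""
    if max_count <= 0:
        return (0, 255, 0)
    # Clamp the ratio to [0, 1] so out-of-range counts still get a valid color.
    ratio = min(max(count / max_count, 0.0), 1.0)
    return (round(255 * ratio), round(255 * (1 - ratio)), 0)

# Invented sample data; country codes and record types are placeholders.
records = [
    {"country": "AA", "type": "phone"},
    {"country": "AA", "type": "internet"},
    {"country": "AA", "type": "internet"},
    {"country": "BB", "type": "phone"},
]

counts = count_by_country(records)
busiest = max(counts.values())
colors = {code: heat_color(n, busiest) for code, n in counts.items()}
```

In this sketch the country with the most records comes out pure red, and a country with no records would come out pure green. A real tool would presumably draw on far richer data and a more careful color scale.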

The insight into the software used by the NSA comes amid claims by agency spokesperson Judith Emmel that the NSA cannot at the moment determine how many Americans may be accidentally included in its surveillance.

“Current technology simply does not permit us to positively identify all of the persons or locations associated with a given communication,” Emmel said Saturday, adding that “it is harder to know the ultimate source or destination, or more particularly the identity of the person represented by the TO:, FROM: or CC: field of an e-mail address or the abstraction of an IP address.”

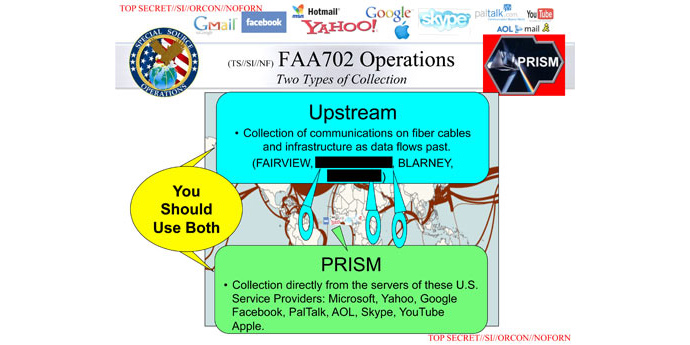

NSA data sources

Another slide from the internal NSA presentation, redacted by the Guardian editors, details the data gathering methods used in the NSA global surveillance program.

The first method involves intercepting data from “fiber cables and infrastructure as data flows past” under Section 702 of the FISA Amendments Act (FAA) of 2008.

The second method is data collection “directly from the servers of the US service providers.”

The presentation encourages analysts to use both methods for better results.

Google, Facebook negotiated ‘secure portals’ to share data with NSA?

Meanwhile, a report by the New York Times revealed that Internet giants, including Google and Facebook, have been in negotiations with the US security agency over ‘digital rooms’ for sharing the requested data. The companies still insist there is no “back door” for direct access to user data on their servers.

The Internet companies seem more compliant with the spy agencies than they want to appear to their users, and are cooperating on “behind-the-scenes transactions” of the private information, according to a report that cites anonymous sources “briefed on the negotiations.”

According to the report, Google, Microsoft, Yahoo, Facebook, AOL, Apple and Paltalk have “opened discussions with national security officials about developing technical methods to more efficiently and securely share the personal data of foreign users in response to lawful government requests,” sometimes “changing” their computer systems for this purpose.

These methods included the creation of “separate, secure portals” online, through which the government would conveniently request and acquire data from the companies.

Twitter was the only major Internet company mentioned in the report that allegedly declined to facilitate data transfers to the NSA in the way described. Unlike a legitimate FISA request, such a move was not “a legal requirement” in Twitter's view.

The sources claim the negotiations have been actively under way in recent months, referring to a Silicon Valley visit by the chairman of the Joint Chiefs of Staff, Martin E. Dempsey. Dempsey is said to have met executives of Facebook, Microsoft, Google and Intel to secretly discuss their collaboration on the government’s “intelligence-gathering efforts.”

NSA pressured to declassify more PRISM details

In response to the fury over the US government’s counterterrorism techniques, Director of National Intelligence James Clapper revealed some details of the PRISM data-scouring program for the second time in three days.

PRISM, one of the “most important tools for the protection of the nation's security,” is an internal government computer system for collecting “foreign intelligence information from electronic communication service providers under court supervision,” Clapper said.

He also said that PRISM seeks foreign intelligence information concerning foreign targets located outside the US and cannot intentionally target any US citizen or any person known to be in the US. As for “incidentally intercepted” information about a US resident, the dissemination of such data is prohibited unless it is “evidence of a crime”, “indicates” a serious threat, or is needed to “understand foreign intelligence or assess its importance.”

Clapper also stressed that the agency operates with court authority and that it does not unilaterally obtain information from the servers of US telecoms and Internet giants without their knowledge and a FISA Court judge's approval.