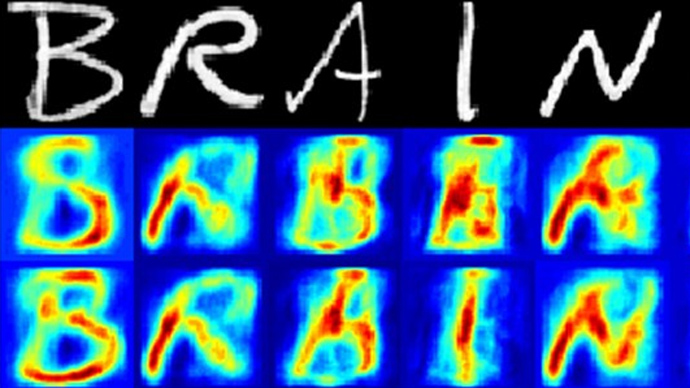

Mind reading: Scientists reconstruct letters from brain scan data

Dutch scientists have taken another step toward reading people’s minds by creating a computer program that uses brain scans to decode what a person is looking at.

A team from Radboud University Nijmegen in the Netherlands was able to extract information from the human brain by applying a combination of high-resolution MRI, shape recognition software and computational modeling.

During the tests, the Dutch researchers showed participants the letters B, R, A, I, N and S on screen and were able to identify exactly when, during the scan, a person was looking at each of those letters.

“We’re basically decoding perception,” Marcel van Gerven, a co-author of the study, which is to be published in NeuroImage, told the Wired website.

Functional MRI scans are usually used to measure changes in overall brain activity, but van Gerven’s group took a different approach and decided to zoom in on smaller, more specific units of the occipital lobe, known as voxels.

Each voxel measures 2x2x2 millimeters, while the occipital lobe is the part of the brain that reacts to visual stimuli, processing what the eyes see through the retina.

The scientists were able to create a database of the specific changes that happened in the brain after each letter appeared on screen. This database was run through an algorithm designed to work in a similar way to the brain when it builds images of objects from the sensory information it receives.

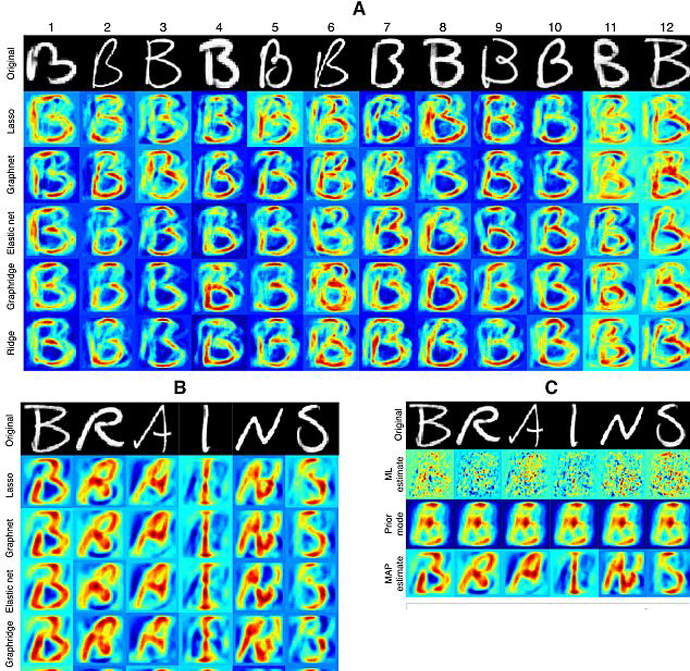

The algorithm converted the voxels and their relevant changes into image pixels, making it possible to reconstruct a picture of what the person was looking at during the scan.

Once you’ve taught the algorithm how the voxels respond to stimuli, “you could reconstruct any possible inputs,” van Gerven explained.
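The idea of teaching an algorithm how voxels respond and then turning new voxel readings back into pixels can be illustrated with a small sketch. This is not the study's actual method or data: it uses plain ridge regression as a stand-in for the linear reconstruction, and the voxel responses are simulated. The function names (`fit_linear_decoder`, `reconstruct`, `simulate`) and all sizes and noise levels are assumptions chosen for illustration.

```python
import numpy as np


def fit_linear_decoder(voxels, pixels, ridge=1.0):
    """Fit weights W so that voxels @ W approximates pixels (ridge regression)."""
    n_voxels = voxels.shape[1]
    gram = voxels.T @ voxels + ridge * np.eye(n_voxels)
    return np.linalg.solve(gram, voxels.T @ pixels)


def reconstruct(voxels, weights):
    """Map voxel responses back to estimated pixel intensities."""
    return voxels @ weights


def simulate(n_trials=200, n_pixels=16, n_voxels=40, noise=0.05, seed=0):
    """Simulated data (an assumption): each voxel is a noisy linear mix of pixels."""
    rng = np.random.default_rng(seed)
    images = rng.integers(0, 2, size=(n_trials, n_pixels)).astype(float)
    encoding = rng.normal(size=(n_pixels, n_voxels))
    voxels = images @ encoding + noise * rng.normal(size=(n_trials, n_voxels))
    return images, voxels


images, voxels = simulate()

# "Teach" the decoder on the first 150 trials, then test on unseen trials.
train, test = slice(0, 150), slice(150, 200)
weights = fit_linear_decoder(voxels[train], images[train], ridge=1.0)
recon = reconstruct(voxels[test], weights)

# Fraction of held-out pixels recovered after thresholding at 0.5.
accuracy = np.mean((recon > 0.5) == (images[test] > 0.5))
print(f"pixel accuracy on held-out trials: {accuracy:.2f}")
```

Because the decoder is tested on trials it never saw during fitting, the sketch mirrors van Gerven's point: once the voxel-to-pixel mapping is learned, it can in principle be applied to new inputs. Real brain data is far noisier than this simulation.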

The study, called “Linear reconstruction of perceived images from human brain activity,” used information obtained from a selection of 1,200 voxels, but van Gerven claims the algorithm could also be used to build images from 15,000 voxels.

This could include complex pictures, such as a person’s face, but would require improved hardware, he said.

But the scientist pointed out that this is still not the same as reading a person’s thoughts, as it’s not known whether imagining something has the same effect on the occipital lobe as external stimuli do.

“Do these areas become activated in the same way if we [imagine] a mental image?” van Gerven wondered. “If they do, then it would be possible [to decode them].”

An Inria study, performed in France in 2006, hinted that this might be true, as it showed that imagined patterns could be reconstructed using data from the occipital lobe.

Mind-reading and telepathic computer programs are “still an open question,” van Gerven concluded.